A deep neural network is built from a few interlocking components: an input layer, one or more hidden layers, an output layer, and the many perceptrons (nodes) that make up each layer.

Each connection carries a weight, each node has a bias, and an activation function shapes each node’s output. Together, these are what make neural network learning possible.

By connecting these elements into a layered structure, deep learning, a subfield of machine learning, loosely mimics the human brain’s processing patterns 🧠 to solve complex problems.

What role does the input layer play in deep neural networks?

The input layer in a deep neural network is where raw data from outside sources enters the network. It is the network’s first point of contact with that data.

It is essential because each ‘neuron’, or node, in this layer represents an individual input variable or feature from your dataset.

The input layer typically performs no computation of its own; it passes the feature values on to the hidden layers for processing. Its importance is often underestimated, yet it provides the base for every computational operation 💻 that follows in the neural network.
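To make the "one node per feature" idea concrete, here is a minimal sketch in plain Python. The record, its field names, and the `to_input_vector` helper are all hypothetical and chosen only for illustration; they do not come from any particular dataset or library.

```python
# Hypothetical record; the field names and values are illustrative only.
record = {"age": 42.0, "income": 55000.0, "tenure_years": 3.5}

# A fixed feature order: each position corresponds to one input node.
FEATURE_ORDER = ["age", "income", "tenure_years"]


def to_input_vector(rec, order):
    """Return the record's values as a list, one entry per input node."""
    return [float(rec[name]) for name in order]


x = to_input_vector(record, FEATURE_ORDER)

# The width of the input layer equals the number of features.
input_layer_width = len(x)
print(input_layer_width, x)
```

Here the input layer would have three nodes, one for each feature, and the list `x` is what gets handed to the first hidden layer.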

How do hidden layers contribute to the functionality of deep neural networks?

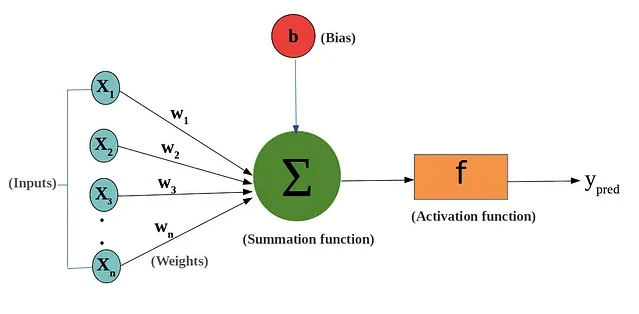

The role of hidden layers within deep neural networks lies primarily in their ability to transform the data passed from the input layer. These layers, which sit between the input and output layers, contain neurons that apply a set of weights and biases to the data.

The result is then passed through an activation function, which adds non-linearity to the model. This non-linearity is what lets hidden layers learn intricate patterns in data and solve complex tasks.
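The transformation described above (weighted sum, plus bias, then activation) can be sketched for a single hidden layer as follows. The function names, the choice of ReLU as the activation, and all numeric values are assumptions made for this example.

```python
def relu(z):
    """ReLU activation: passes positive values through, clips negatives to 0."""
    return max(0.0, z)


def dense_forward(inputs, weights, biases, activation):
    """Forward pass of one fully connected layer.

    weights[j][i] is the weight from input i to neuron j;
    biases[j] is the bias of neuron j.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        # Weighted sum of the inputs plus the neuron's bias.
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z))
    return outputs


# Illustrative values: 2 input features feeding 3 hidden neurons.
x = [1.0, 2.0]
W = [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]]
b = [0.0, -1.0, 0.5]

h = dense_forward(x, W, b, relu)
print(h)  # [0.0, 2.0, 0.5]
```

The first neuron's weighted sum is negative (-1.5), so ReLU clips it to zero; the other two pass through unchanged. Stacking several such layers, each feeding the next, is what makes the network "deep".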

What is the significance of the output layer in deep neural networks?

The output layer in a deep neural network is the final layer where all previous computations culminate. It translates the complex information processed by the hidden layers into a form that is useful to the end user.

The number of nodes in this layer corresponds to the number of expected outputs. The layer applies its own activation function, chosen to suit the task (for example, softmax for multi-class classification), to generate the final prediction. In this way, the output layer concludes the data processing in a deep neural network.
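As an illustration of an output layer with one node per expected output, the sketch below turns raw scores into class probabilities. The article does not name a specific output activation; softmax is assumed here because it is a common choice for multi-class classification. The class names and scores are made up.

```python
import math


def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 3-class problem: one output node per class.
CLASS_NAMES = ["cat", "dog", "bird"]
scores = [2.0, 1.0, 0.1]  # illustrative raw outputs from the last layer

probs = softmax(scores)
predicted = CLASS_NAMES[probs.index(max(probs))]
print(predicted, probs)
```

For a regression task with a single numeric target, the output layer would instead have one node and often no squashing activation at all.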

Why are weights, biases, and activation functions important in deep neural networks?

Weights and biases in deep neural networks are parameters that the model learns from training data.

Each neuron multiplies its inputs by its weights and adds its bias, transforming the data as it passes through the model’s hidden layers. Because these values are adjustable, the neural network can adapt and learn via optimization techniques like gradient descent.
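To show how a weight and a bias are learned, here is a minimal sketch of gradient descent on a single linear neuron with a mean squared error loss. The function name `train_neuron`, the learning rate, the epoch count, and the toy data are assumptions for illustration; real networks apply the same idea across many neurons via backpropagation.

```python
def train_neuron(samples, lr=0.1, epochs=500):
    """Fit y ≈ w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in samples:
            error = (w * x + b) - y  # prediction minus target
            # Gradients of the mean of error**2 with respect to w and b.
            grad_w += 2.0 * error * x / n
            grad_b += 2.0 * error / n
        # Step against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(data)
print(round(w, 3), round(b, 3))
```

Starting from zero, the weight and bias move step by step toward the values that generated the data (a weight near 2 and a bias near 1).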

Meanwhile, activation functions play the pivotal role of introducing non-linear properties to the network.

The activation function, applied to the weighted sum of inputs and bias, controls the neuron’s output or firing strength. Without it, stacked layers would collapse into a single linear transformation, so the network could not capture complex patterns no matter how well its weights were tuned.
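The following sketch illustrates why the non-linearity matters. It defines two common activation functions (sigmoid and ReLU, chosen here as examples) and a tiny two-layer network with hypothetical, hard-coded weights. With no activation between the layers, the network is just a straight line; with ReLU, it is not.

```python
import math


def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Passes positive values through, clips negatives to 0."""
    return max(0.0, z)


def identity(z):
    """No activation at all, used here for comparison."""
    return z


def two_layer(x, activation):
    """Two stacked one-neuron layers with illustrative fixed weights."""
    h = activation(2.0 * x - 1.0)  # layer 1: weight 2.0, bias -1.0
    return -3.0 * h + 0.5          # layer 2: weight -3.0, bias 0.5


# Without an activation, the two layers collapse to the line -6x + 3.5.
linear_out = two_layer(-2.0, identity)

# With ReLU, the negative pre-activation is clipped, so the output differs.
relu_out = two_layer(-2.0, relu)

print(linear_out, relu_out)  # 15.5 0.5
```

However many purely linear layers are stacked, the result can always be rewritten as one linear layer, which is why an activation function is placed between them.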

Conclusion

A solid understanding of the constituents of deep neural networks (input layer, hidden layers, output layer, weights, biases, and activation functions) is crucial for anyone who wants to grapple with the complexities of generative AI.

Combined into a layered mechanism, these elements let deep neural networks loosely imitate how the human brain processes information, enabling them to make complex decisions and accurate predictions on hard machine learning problems.

For a more detailed understanding, consider reading the pillar article “Fundamental Elements of a Deep Neural Network in Generative AI”.
