The CycleGAN is a type of generative adversarial network (GAN) that excels in image-to-image translation tasks where paired examples are not available.

It works by simultaneously training two separate GANs in a cycle: one GAN learns to translate an image from domain A to domain B, and another translates it back from domain B to A.

This cyclical process ensures that the generated images in both domains retain the fundamental structures and characteristics of the original images.

What are the key components of CycleGAN?

CycleGAN consists of two generators and two discriminators.

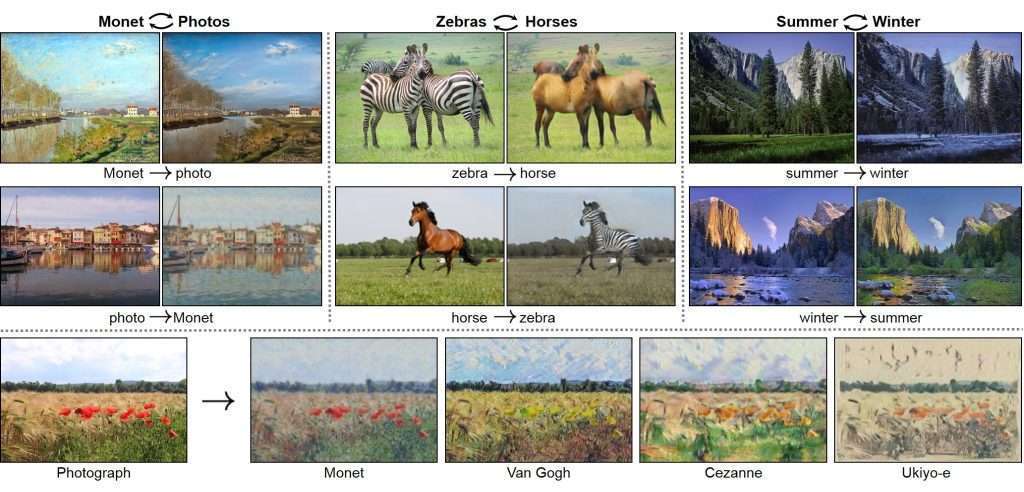

The generators are responsible for transforming images from one domain to another and vice versa. For instance, one generator will transform a zebra into a horse, while the other does the opposite.

Each generator has a corresponding discriminator, which judges whether an image is a real sample from its domain or one produced by the generator. Training against this judge pushes each generator to produce translations that look as authentic as possible.

The architecture also features loss functions that play crucial roles. The adversarial loss ensures that translated images are realistic, while the cycle consistency loss ensures that an image can be translated back to its original form.
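As a rough illustration of the adversarial loss, the sketch below uses a least-squares formulation, which is the variant the original CycleGAN paper adopts in place of the classic log-likelihood loss. The function names, and the choice to represent discriminator outputs as plain lists of scores, are assumptions made for this example and do not come from any particular library.

```python
from statistics import fmean


def discriminator_loss(real_scores, fake_scores):
    """Least-squares loss for one discriminator (hypothetical helper).

    Real images should score close to 1, generated images close to 0.
    """
    real_term = fmean([(s - 1.0) ** 2 for s in real_scores])
    fake_term = fmean([s ** 2 for s in fake_scores])
    return 0.5 * (real_term + fake_term)


def generator_adversarial_loss(fake_scores):
    """Least-squares loss for one generator (hypothetical helper).

    The generator is rewarded when the discriminator scores its
    outputs close to 1, i.e. mistakes them for real images.
    """
    return fmean([(s - 1.0) ** 2 for s in fake_scores])


# A discriminator that separates real from fake perfectly has zero loss,
# while the generator it is judging receives the maximum penalty here.
perfect_d = discriminator_loss([1.0, 1.0], [0.0, 0.0])
fooled_nobody = generator_adversarial_loss([0.0, 0.0])
```

In a full CycleGAN there would be two such pairs, one per domain, and the scores would come from neural networks rather than hand-written lists.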

This dual approach allows CycleGAN to produce high-quality translations even without paired training samples.

How does CycleGAN maintain image consistency across domains?

Cycle consistency is one of the distinct features of CycleGAN. It means that if an image from domain A is translated to domain B, and then translated back to A, it should closely resemble the original image in domain A.

This is achieved through the cycle consistency loss, which effectively acts as a regularizer to enforce this property. Simply put, it penalizes the network if the initial input and the cycled image are too dissimilar, guiding the generators to preserve key attributes across translations.
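A minimal sketch of this penalty, assuming images are flat lists of pixel values and the two generators are ordinary Python functions, might look like the following. The toy generators (`brighten`, `darken`, `darken_too_much`) are hypothetical stand-ins for trained networks; the L1 (mean absolute) distance matches the choice made in the original CycleGAN paper.

```python
def l1_distance(image_a, image_b):
    """Mean absolute difference between two equally sized flat images."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(p - q) for p, q in zip(image_a, image_b)) / len(image_a)


def cycle_consistency_loss(real_a, real_b, gen_ab, gen_ba):
    """Penalize the round trips A -> B -> A and B -> A -> B."""
    forward = l1_distance(real_a, gen_ba(gen_ab(real_a)))
    backward = l1_distance(real_b, gen_ab(gen_ba(real_b)))
    return forward + backward


# Toy "generators" (assumptions for illustration only).
def brighten(image):
    return [p + 0.5 for p in image]


def darken(image):
    return [p - 0.5 for p in image]


def darken_too_much(image):
    return [p - 0.75 for p in image]


real_a = [0.0, 0.25, 0.5]
real_b = [0.5, 0.75, 1.0]

# Generators that invert each other exactly incur no penalty.
consistent = cycle_consistency_loss(real_a, real_b, brighten, darken)

# A mismatched pair leaves each round trip off by 0.25 per pixel.
inconsistent = cycle_consistency_loss(real_a, real_b, brighten, darken_too_much)
```

The loss only compares each image with its own reconstruction, which is why no paired target image from the other domain is needed.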

The cycle consistency loss is a component of the total loss function, and it gives the model a way to ‘self-correct’ over the training process, eliminating the need for paired data.
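One common way to write the total objective, following the formulation in the original CycleGAN paper, is shown here. The symbol names are assumptions chosen to match the domains A and B used in this article: $G_{AB}$ and $G_{BA}$ are the two generators, $D_A$ and $D_B$ are the discriminators for each domain, and $\lambda$ is a weight that sets how strongly cycle consistency is enforced relative to realism.

$$
\mathcal{L}(G_{AB}, G_{BA}, D_A, D_B) = \mathcal{L}_{\text{GAN}}(G_{AB}, D_B) + \mathcal{L}_{\text{GAN}}(G_{BA}, D_A) + \lambda \, \mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA})
$$

$$
\mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA}) = \mathbb{E}_{a}\big[\lVert G_{BA}(G_{AB}(a)) - a \rVert_1\big] + \mathbb{E}_{b}\big[\lVert G_{AB}(G_{BA}(b)) - b \rVert_1\big]
$$

The generators are trained to minimize this objective while the discriminators are trained to maximize the adversarial terms.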

This unique feature is what allows CycleGAN to learn from unpaired datasets, making it highly versatile and adaptable for varied image translation tasks.

What kinds of problems can CycleGAN address?

CycleGAN has wide applications in different fields that require domain translation without paired examples.

A common practical use is in style transfer, where the aim is to apply the style of one image (such as a painting) to the content of another (like a photograph), as discussed in our pillar article on ‘CycleGAN: From Paintings to Photos and Beyond’. This can be beneficial for content creators who want to generate novel visuals without having to manually edit images.

In addition to art, CycleGAN can simulate changes in daylight in photographs used to train autonomous vehicles, enhance satellite imagery, and alter facial attributes, among other tasks.

This ability to adapt to different content without direct examples makes it an invaluable tool in the arsenal of generative AI.

What are the limitations and future potentials of CycleGAN?

Despite its impressive abilities, CycleGAN does have limitations. It can struggle with complex transformations, particularly those that change an object’s shape rather than its color or texture, and with high-resolution images, leading to artifacts or implausible results.

There are also computational challenges, as training CycleGANs requires significant GPU power and time. Developing more efficient architectures or using transfer learning are potential ways to address these issues.

Looking forward, there could be significant advancements in CycleGAN technology. Improvements in the network architecture could lead to faster and more accurate translations.

There’s also exciting potential in integrating other AI technologies, such as reinforcement learning, to further enhance CycleGAN’s capabilities. These developments could open up new avenues for creative and practical applications in content creation, simulation, and beyond.

Conclusion

CycleGAN is an influential framework in generative AI that enables high-quality image-to-image translation without the need for paired examples. It achieves this through its dual-GAN structure and the combination of adversarial and cycle consistency losses, which together let the model self-correct during training.

While there are challenges in terms of complexity and computational requirements, the future for CycleGAN holds significant promise for advancements in AI-driven content creation and other innovative applications.

By understanding how CycleGAN works, startups and medium-sized enterprises can streamline their content generation processes and open up new creative avenues.
